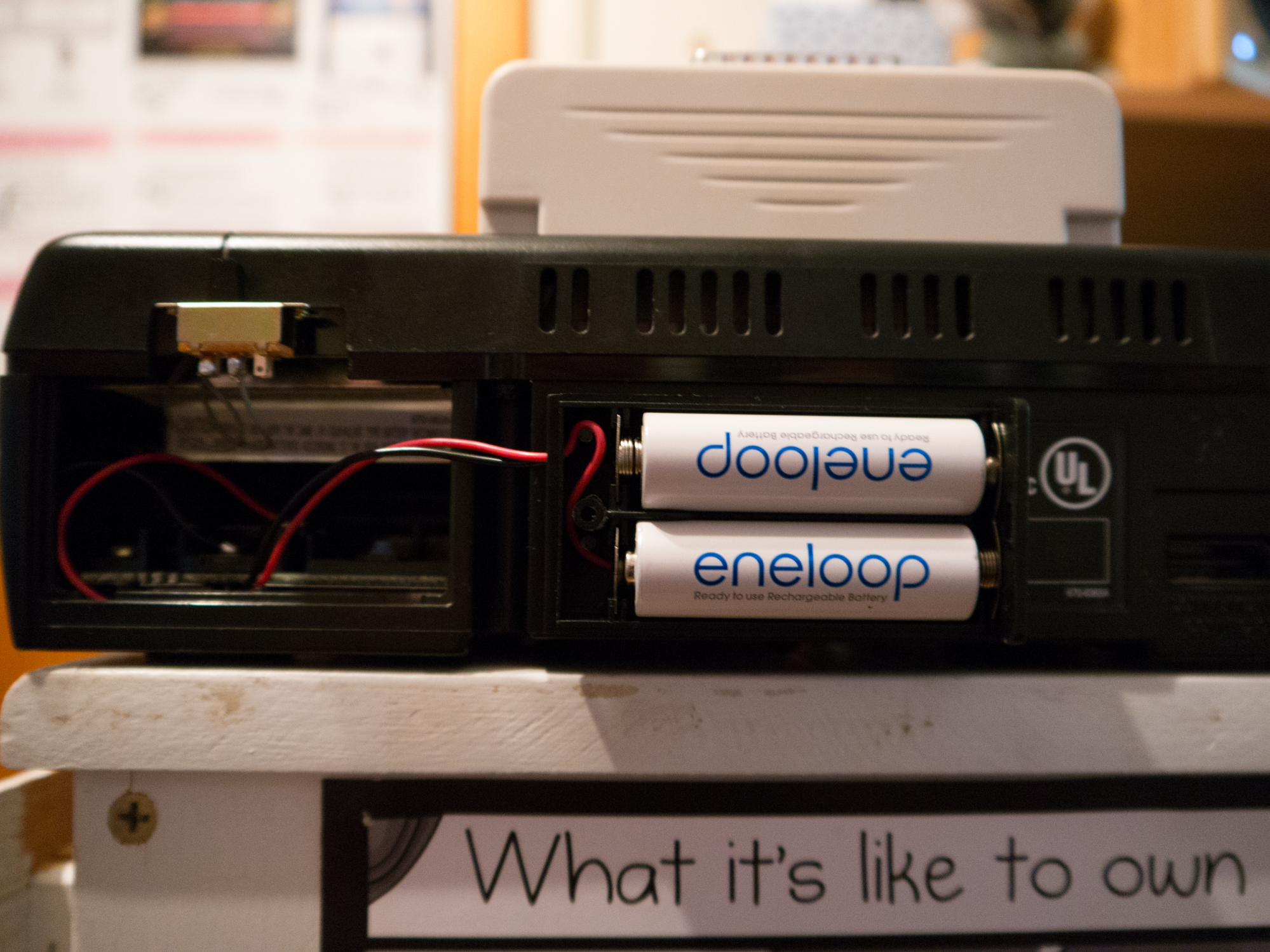

I’ve been cleaning out old boxes of junk and stumbled on my forgotten Sega Saturn console. Sometime during high school, I had taken the wires for the CD-ROM door switch and simply twisted them together so I could use the Swap Trick to play backup games. I decided to do a proper fix by soldering in an actual on/off switch, and to mod the built-in battery while I was at it. Unlike the Sega Dreamcast that came after it, the Sega Saturn does not use a built-in rechargeable battery for memory saves; it uses a CR2032 lithium coin cell. According to this Sega Saturn Battery FAQ, the CR2032 has a capacity of 230mAh and will last 1-2 years in normal use, or 19 days if the console is unplugged. The FAQ also discusses using other lithium batteries with higher capacities than the CR2032; I decided to try Panasonic Eneloops (rechargeable NiMH AA cells) instead. They are considered one of the best rechargeable AA batteries on the market, if not the best: Eneloops have a minimum capacity of 1900mAh and hold most of their charge while sitting unused for several years.

I went to my local Fry’s Electronics store to pick up a two-AA battery holder, a mini on/off switch, and some 3M mounting tape. The battery mod is straightforward: solder the battery holder’s positive lead to the Saturn’s positive battery contact, and do the same for the negative side. If you use a two-AA holder, make sure it’s wired in series so the two cells’ voltages add up. Two 1.5V alkaline or lithium AAs give 3V, matching the CR2032; two Eneloops, which are NiMH cells at 1.2V nominal, give about 2.4V.
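To see why the holder has to be wired in series, here’s a small Python sketch of how identical cells combine. The voltages are typical nominal values I’m assuming, not measurements from my console, and `pack` is just a hypothetical helper name for illustration.

```python
"""Sketch: how identical cells combine in series vs. parallel (nominal values)."""
from __future__ import annotations


def pack(cell_volts: float, cell_mah: float, count: int, wiring: str) -> tuple[float, float]:
    """Return (volts, mAh) for `count` identical cells. Hypothetical helper."""
    if wiring == "series":
        # Series: voltages add, capacity stays that of a single cell.
        return cell_volts * count, cell_mah
    if wiring == "parallel":
        # Parallel: voltage stays that of a single cell, capacities add.
        return cell_volts, cell_mah * count
    raise ValueError(f"unknown wiring: {wiring!r}")


if __name__ == "__main__":
    # Two Eneloops (assumed 1.2 V nominal, 1900 mAh minimum each), wired both ways.
    for wiring in ("series", "parallel"):
        volts, mah = pack(1.2, 1900, 2, wiring)
        print(f"2 x Eneloop in {wiring}: {volts:.1f} V, {mah:.0f} mAh")
    # Two 1.5 V alkaline/lithium AAs in series, to compare with a 3 V CR2032.
    volts, _ = pack(1.5, 0, 2, "series")  # capacity is irrelevant for this comparison
    print(f"2 x 1.5 V AA in series: {volts:.1f} V")
```

The parallel case is only there to show what the wrong wiring does: you’d get twice the capacity but just 1.2V, well below the 3V a CR2032 supplies.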

Since the Eneloops have a capacity of at least 1900mAh (wiring them in series adds voltage, not capacity), more than eight times the CR2032’s 230mAh, they should theoretically last much longer. I’ve had the mod running since yesterday, July 29th, and will post an update on how well it performs. You could also use AA lithium batteries like these instead.
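For a rough sense of “much longer,” here’s a back-of-the-envelope Python sketch using the FAQ’s own numbers. It assumes the Saturn draws a constant average current (the one implied by a 230mAh CR2032 lasting 19 days unplugged) and that runtime scales linearly with capacity. It ignores NiMH self-discharge, the lower 2.4V pack voltage, and whatever cutoff voltage the Saturn actually needs, so treat the result as an optimistic ceiling, not a prediction.

```python
"""Back-of-the-envelope runtime estimate from the Saturn battery FAQ's figures."""

HOURS_PER_DAY = 24


def implied_draw_ma(capacity_mah: float, runtime_days: float) -> float:
    """Average current (mA) that would drain `capacity_mah` in `runtime_days`."""
    return capacity_mah / (runtime_days * HOURS_PER_DAY)


def ideal_runtime_days(capacity_mah: float, draw_ma: float) -> float:
    """Runtime at a constant draw, ignoring self-discharge and cutoff voltage."""
    return capacity_mah / draw_ma / HOURS_PER_DAY


if __name__ == "__main__":
    CR2032_MAH = 230     # capacity quoted in the FAQ
    UNPLUGGED_DAYS = 19  # FAQ's runtime for an unplugged console
    ENELOOP_MAH = 1900   # Eneloop minimum; two in series still give 1900 mAh

    draw = implied_draw_ma(CR2032_MAH, UNPLUGGED_DAYS)
    days = ideal_runtime_days(ENELOOP_MAH, draw)
    print(f"Implied draw while unplugged: {draw:.3f} mA")
    print(f"Ideal Eneloop runtime while unplugged: {days:.0f} days")
    print(f"Capacity ratio vs. CR2032: {ENELOOP_MAH / CR2032_MAH:.1f}x")
```

With these inputs the implied draw is about 0.504mA, and the ideal Eneloop runtime comes out to roughly 157 days unplugged versus the FAQ’s 19 days for the CR2032, about 8.3 times longer. Real-world results will likely be lower.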

I lost the cover to the battery compartment, so I just mounted the battery holder where you would normally see the Sega Saturn’s model number. You can also see the mini on/off switch mounted above the battery compartment area. They’re not the cleanest-looking mods, but they work.